What Is Prompt Engineering?

A “prompt” is an input to a natural language processing (NLP) model. Typically, it contains user instructions that tell the model what kind of output is desired. The model analyzes the prompt and, based on its training, produces output text, commonly known as a “completion”. Prompts are not new, but they have become especially important for the operation of large language models (LLMs) and other generative AI systems.

Prompt engineering involves crafting inputs that interact effectively with the AI model to generate desired outcomes. It is critical for leveraging the latest generation of AI systems, such as LLMs, AI chatbots, and image and video generation systems. Successful prompts guide the AI and provide sufficient context to allow it to respond appropriately to user inputs.

Prompt engineering is a rapidly evolving field practiced by people in a variety of roles, from individual users interacting with generative AI systems to developers building novel AI applications. Successful prompt engineering requires an understanding of the model’s capabilities and the nuances of human language. A well-engineered prompt can improve an AI model’s performance and induce it to provide more accurate, better formatted, and ultimately more useful responses. This is part of an extensive series of guides about machine learning.

Why Is Prompt Engineering Important in Generative AI?

Prompt engineering offers several important benefits for generative AI projects:

- Provides context and examples: Generative AI models can produce text, images, or code based on the prompts they receive. Successful prompt engineering guides the model to produce a coherent and contextually relevant output. If a prompt is overly vague, or lacks important background information, the AI might generate irrelevant content, while prompts that are too specific could limit the model’s creativity.

- Easy adaptation to specific use cases: Prompt engineering is a relatively easy, cost-effective way to adapt a generative AI model to specific uses, even if they were not originally considered by the model’s developers. For many use cases, prompt engineering is sufficient to achieve good results, avoiding the need for full model fine-tuning, which is complex and computationally expensive.

- Supporting multi-turn conversations: When generative AI needs to engage in multi-turn conversations, such as in customer service chatbots, prompts must do more than generate an accurate initial response. They also carry the full prior context of the conversation and anticipate potential follow-up questions, so that the conversation flows logically and naturally.

Types of Prompts

Large language models (LLMs) typically operate with three levels of prompts:

- Base prompts: These are the initial inputs provided to foundational AI models to understand general tasks or instructions. Base prompts establish the groundwork by defining the core objective or topic that the model will respond to. They cannot be modified by end-users, and have a crucial impact on model performance and safety.

- System prompts: System prompts are used to set overarching guidelines or behavior for an AI model in a particular session. They shape how the model should interact and respond by controlling aspects such as tone, style, and adherence to specific standards. For example, a system prompt might tell the LLM to act as a “friendly customer service representative” and say which types of questions it should answer.

- User prompts: User prompts are the direct instructions or queries provided by end-users. These often seek specific answers or actions, ranging from simple questions to intricate tasks. As the context windows of generative AI models grow, user prompts can increasingly include large amounts of text, or even images and videos, which provide context for the user’s request.
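As a minimal sketch of how system and user prompts are typically combined in a session, the snippet below keeps the system prompt fixed and appends each turn to a running history. The `role`/`content` message shape follows a convention used by several chat APIs and is an assumption here; the `ChatSession` class is hypothetical and no real model is called.

```python
from dataclasses import dataclass, field
from typing import Dict, List

Message = Dict[str, str]


@dataclass
class ChatSession:
    """Hypothetical helper for illustration; not part of any real SDK."""
    system_prompt: str
    history: List[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        # Record one turn of the conversation ("user" or "assistant").
        self.history.append({"role": role, "content": content})

    def messages(self) -> List[Message]:
        # The system prompt comes first; the full history follows so the
        # model sees earlier turns when answering the latest user prompt.
        return [{"role": "system", "content": self.system_prompt}] + self.history


session = ChatSession(
    "You are a friendly customer service representative. "
    "Only answer questions about orders, shipping, and returns."
)
session.add("user", "My order has not arrived.")
session.add("assistant", "Sorry about that. What is your order number?")
session.add("user", "It is 12345.")
payload = session.messages()  # what would be sent to the model on this turn
```

Resending the whole history on every turn is the simplest design; longer conversations may need the history trimmed or summarized to fit the model’s context window.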

6 Prompt Engineering Techniques

The following prompt engineering techniques are recommended by OpenAI for use with its GPT family of large language models, but they are also applicable to other LLMs. They are described in detail in a free course created by OpenAI and AI pioneer Andrew Ng. The examples shown below are taken from the OpenAI prompt engineering guide.

Write Clear Instructions

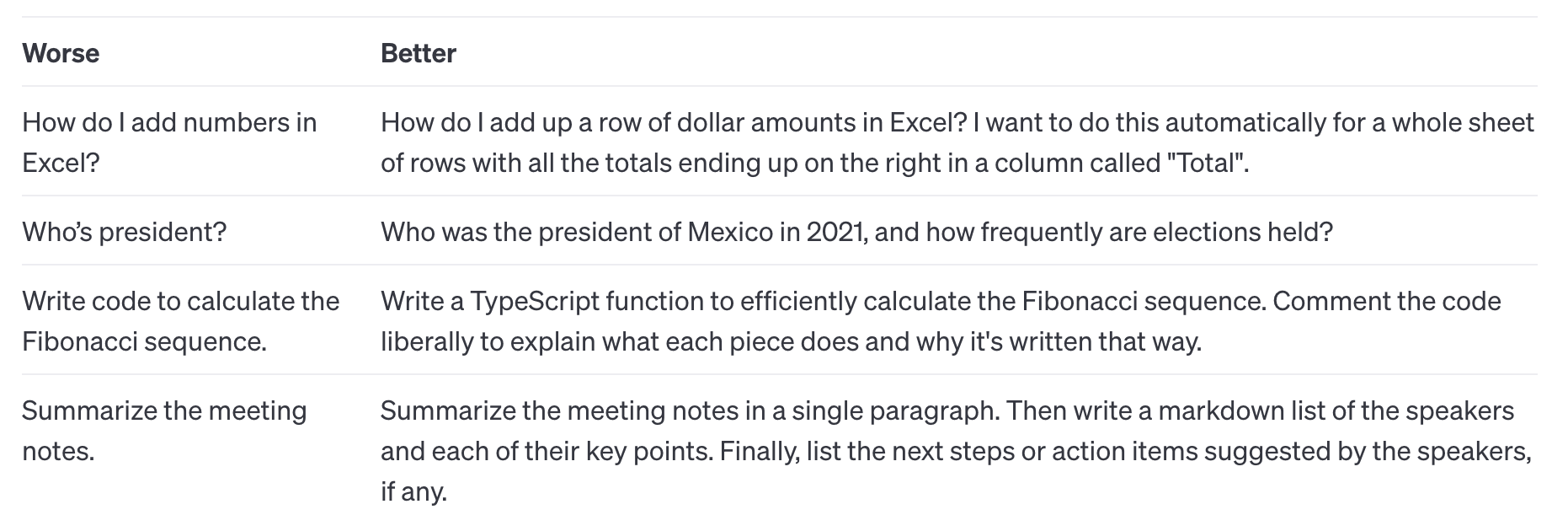

When creating prompts for large language models, clarity in the instructions is crucial to obtaining useful outputs. If the desired outcome is a concise response, explicitly request brevity. For more complex or expert-level responses, specify that the format should reflect this level of expertise. Demonstrating the preferred format with examples can also guide the model more effectively. The clearer the instructions, the less the model needs to infer, increasing the likelihood of achieving the desired result.

The following table shows examples of prompts that do not contain enough information, and how they can be improved to better guide the model.

Image credit: OpenAI
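One way to make instructions explicit in code is to build the prompt from named requirements rather than free text. The sketch below is illustrative only and is not taken from the OpenAI guide; the `clear_prompt` function and its parameters are hypothetical.

```python
def clear_prompt(task: str, audience: str, max_words: int, output_format: str) -> str:
    """Attach explicit, checkable requirements to a task description."""
    return (
        f"{task}\n"
        f"Audience: {audience}\n"
        f"Length: at most {max_words} words\n"
        f"Format: {output_format}"
    )


# A vague prompt leaves audience, length, and format for the model to guess.
vague = "Summarize the meeting notes."

# The specific version states each requirement, so less has to be inferred.
specific = clear_prompt(
    task="Summarize the meeting notes below.",
    audience="executives who did not attend",
    max_words=50,
    output_format="one paragraph followed by a bulleted list of action items",
)
```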

Provide Reference Text

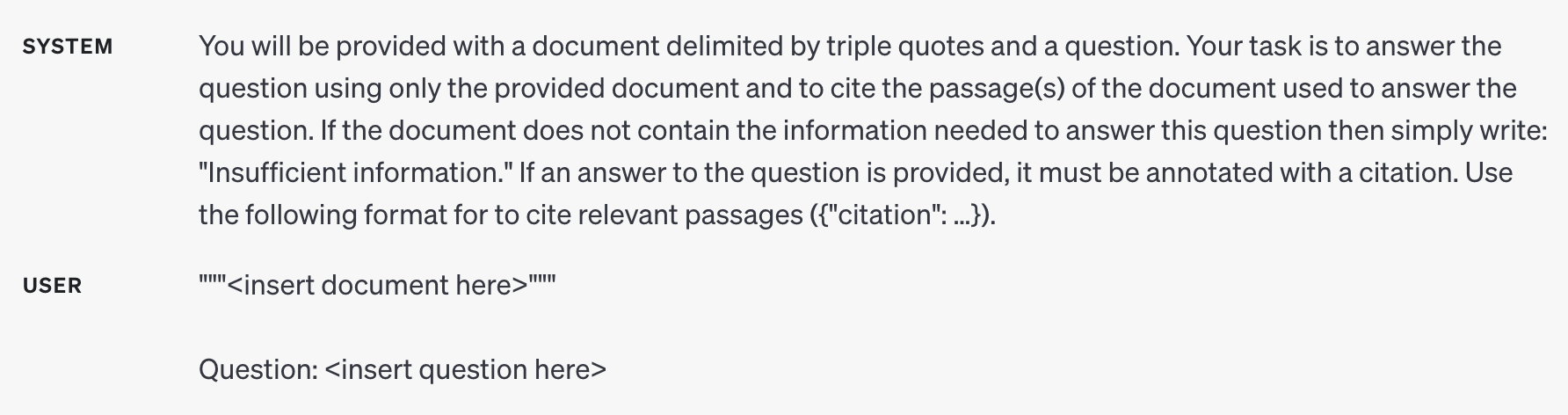

Using reference texts when crafting prompts for language models can significantly enhance the accuracy and relevance of the output, especially in fields that require precise information. This approach is akin to providing a student with notes during an exam; it guides the model to deliver responses based on factual information rather than making uninformed guesses, particularly in specialized or niche topics. By directing the model to use the provided text, the likelihood of fabricating responses is reduced, promoting more reliable and verifiable outputs.

Below is an example of a system and user prompt that instructs the LLM to cite the specific passages from a source used to answer the question:

Image credit: OpenAI
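A minimal sketch of the same idea in code, assuming a chat-style `role`/`content` message format: the reference text is wrapped in delimiters and the system prompt tells the model to answer only from it. The `grounded_messages` function and the exact wording of the instructions are assumptions for illustration.

```python
from typing import Dict, List


def grounded_messages(reference: str, question: str) -> List[Dict[str, str]]:
    """Build messages that restrict the answer to a supplied reference text."""
    system = (
        "Answer using only the document delimited by triple quotes. "
        "Quote the passage that supports each claim. "
        'If the document does not contain the answer, reply "Insufficient information."'
    )
    # Delimiters make it unambiguous where the reference text starts and ends.
    user = f'"""{reference}"""\n\nQuestion: {question}'
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = grounded_messages(
    reference="Returns are accepted within 30 days of delivery.",
    question="How long do I have to return an item?",
)
```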

Split Complex Tasks Into Simpler Subtasks

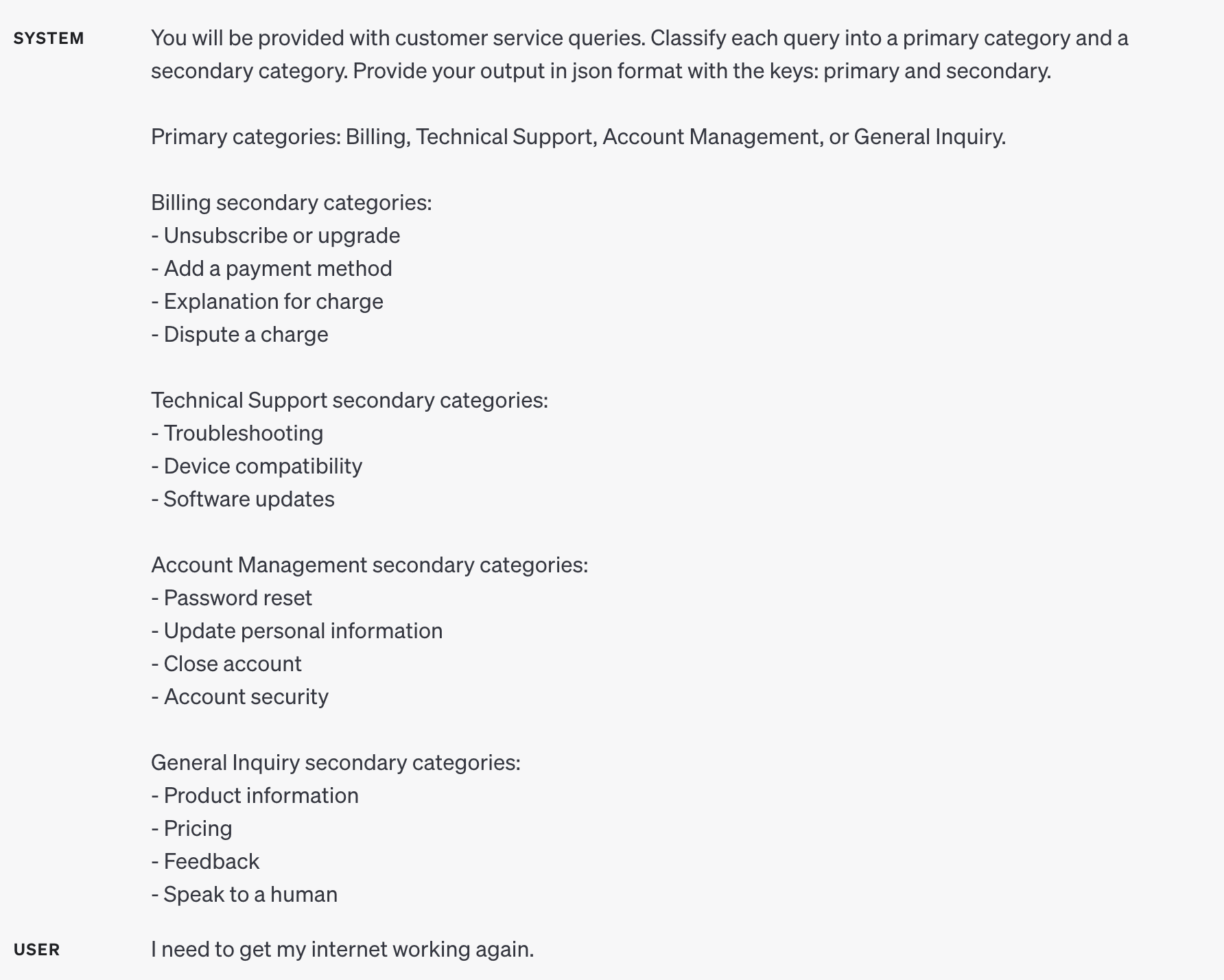

Complex tasks often result in higher error rates and can be overwhelming for the AI. When a complex task is broken into simpler, manageable parts, the model can handle each segment with greater accuracy. This method is akin to modular programming in software engineering, where a large system is divided into smaller, independent modules. For language models, this could involve processing a task in stages, where the output of one stage serves as the input for the next, thereby simplifying the overall task and reducing potential errors.

Below is an example showing how a model can first sort user questions into categories, after which a different prompt is used to process each type of question.

Image credit: OpenAI
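The two-stage flow can be sketched as a small pipeline in which the output of a classification prompt selects the prompt for the second stage. Everything named here is an assumption for illustration: the categories, the prompt wording, and `fake_model`, which stands in for a real LLM call so the example runs offline.

```python
from typing import Callable

ModelFn = Callable[[str], str]

# One specialised prompt per category (hypothetical categories).
HANDLER_PROMPTS = {
    "billing": "You help with invoices and refunds. Customer question: {question}",
    "technical": "You help troubleshoot the product. Customer question: {question}",
}


def answer(question: str, model: ModelFn) -> str:
    # Stage 1: a short prompt whose only job is to pick a category.
    category = model(
        "Classify the question as 'billing' or 'technical'. "
        f"Reply with one word.\nQuestion: {question}"
    ).strip().lower()
    # Stage 2: the category selects a focused prompt; unknown labels fall back.
    template = HANDLER_PROMPTS.get(category, HANDLER_PROMPTS["technical"])
    return model(template.format(question=question))


def fake_model(prompt: str) -> str:
    """Offline stand-in for an LLM call; replace with a real client."""
    if prompt.startswith("Classify"):
        return "billing" if "refund" in prompt.lower() else "technical"
    return f"[reply to: {prompt}]"


result = answer("I want a refund for my last invoice.", fake_model)
```

Keeping each stage’s prompt short means each one can be tested and revised separately.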

Give the Model Time to “Think”

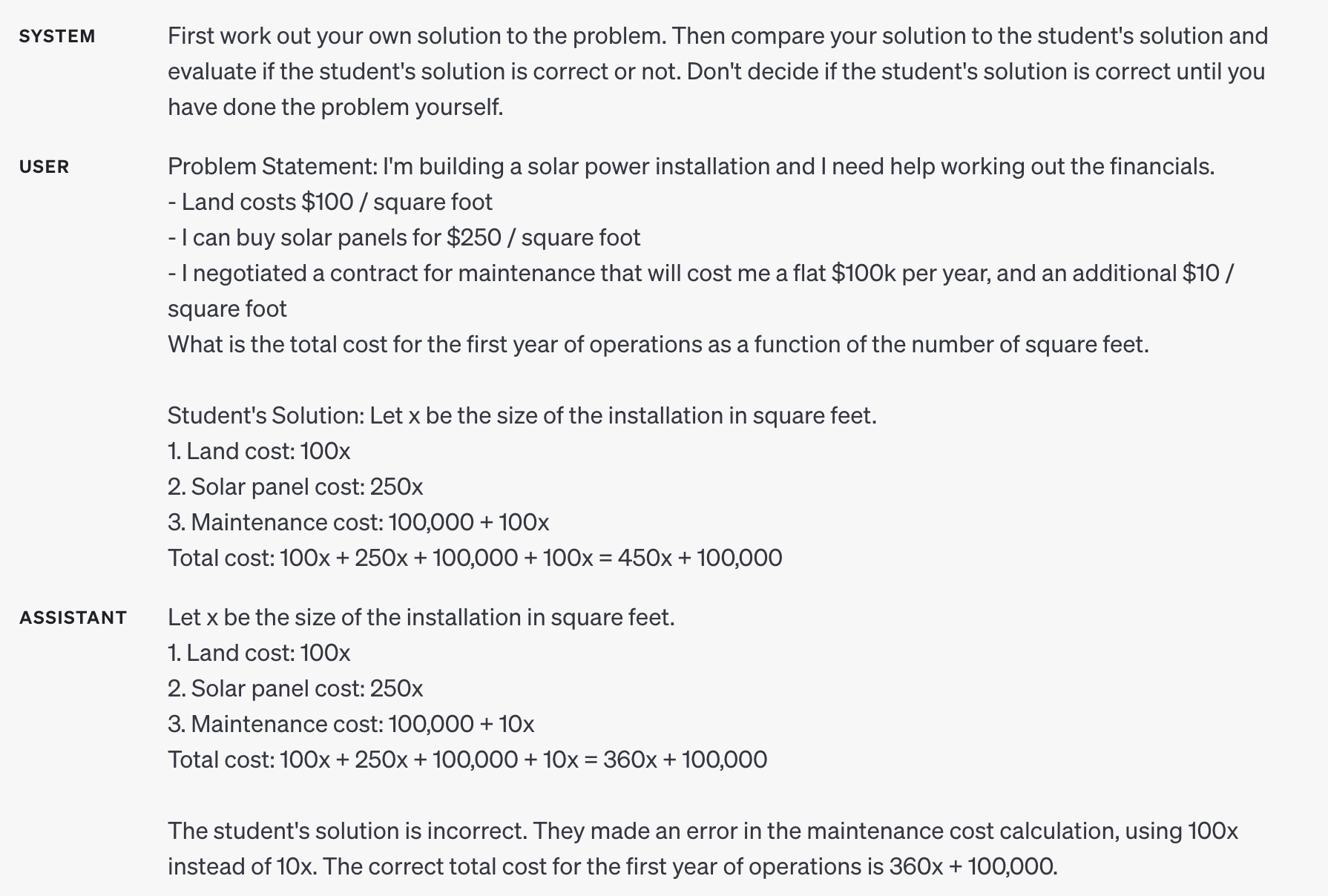

Allowing the model time to “think” or process information can lead to more accurate and thoughtful responses. Encouraging a model to perform a “chain of thought” process before arriving at a conclusion can mimic the human problem-solving process, enhancing the reliability of the responses. This approach is particularly useful in complex calculation or reasoning tasks, where immediate answers may not be as accurate. This strategy encourages the model to use more compute to provide a more comprehensive response.

Below is an example of a prompt that encourages the model to work out its own solution to a problem before evaluating whether the user’s solution is correct.

Image credit: OpenAI
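In code, this pattern usually has two parts: a prompt that asks for the reasoning before the verdict, and a parser that pulls the verdict out of the longer completion. The sketch below is an illustration under assumptions; the function names and the `Verdict:` line convention are hypothetical and not taken from the OpenAI guide.

```python
def grading_prompt(problem: str, student_solution: str) -> str:
    """Ask the model to solve the problem itself before judging the student."""
    return (
        "First work out your own solution to the problem, step by step. "
        "Then compare your solution to the student's solution. "
        "Do not decide whether the student is correct until you have "
        "finished your own solution.\n"
        "End with a line of the form 'Verdict: correct' or 'Verdict: incorrect'.\n\n"
        f"Problem: {problem}\n\nStudent's solution: {student_solution}"
    )


def extract_verdict(completion: str) -> str:
    """Return the text after the last 'Verdict:' line, or '' if there is none."""
    for line in reversed(completion.strip().splitlines()):
        if line.lower().startswith("verdict:"):
            return line.split(":", 1)[1].strip().lower()
    return ""


prompt = grading_prompt("What is 17 * 6?", "17 * 6 = 112")
# A completion might contain reasoning first and the verdict on the last line.
verdict = extract_verdict("17 * 6 = 102, the student wrote 112.\nVerdict: Incorrect")
```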

Use External Tools

To compensate for the inherent limitations of language models, integrating external tools can enhance their performance. For example, using a retrieval-augmented generation system can provide the model with access to additional relevant information beyond its training data. Similarly, tools like a code execution engine can aid the model in performing calculations or executing code more accurately, thus expanding the practical applications of AI in problem-solving scenarios.
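As a rough sketch of retrieval-augmented generation, the snippet below picks the most relevant document for a question and places it in the prompt. Word overlap is used as the relevance score purely to keep the example self-contained; production systems typically use embedding-based search. All names here are hypothetical.

```python
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().replace("?", " ").replace(".", " ").split())


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Rank documents by the number of words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def rag_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Insert the top-k retrieved documents into the prompt as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Use the context to answer.\nContext:\n{context}\n\nQuestion: {query}"


DOCS = [
    "Returns are accepted within 30 days of delivery.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards never expire.",
]
prompt = rag_prompt("How long does shipping take?", DOCS)
```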

Test Changes Systematically

When modifying prompts, systematic testing is essential to ensure that the changes lead to improvements. Never assume that the model understands the prompt or responds correctly to all the elements in a long prompt. Test elements one by one to determine which ones the model actually reacts to, and whether it correctly understands the instructions.
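A small evaluation harness makes this kind of testing repeatable: each prompt variant is run against the same test cases and scored the same way. The sketch below is a minimal illustration; the scoring rule (substring match), the test cases, and `fake_model` are assumptions, and the stub only imitates a model that follows explicit instructions better than vague ones.

```python
from typing import Callable, Dict, List, Tuple

Case = Tuple[Dict[str, str], str]  # (template inputs, expected text)


def score_prompt(template: str, cases: List[Case], model: Callable[[str], str]) -> float:
    """Return the fraction of cases whose completion contains the expected text."""
    passed = 0
    for inputs, expected in cases:
        completion = model(template.format(**inputs))
        if expected.lower() in completion.lower():
            passed += 1
    return passed / len(cases)


def fake_model(prompt: str) -> str:
    """Offline stand-in: only returns a usable label when asked for one word."""
    if "one word" not in prompt:
        return "It is hard to say."
    return "positive" if "great" in prompt else "negative"


CASES: List[Case] = [
    ({"review": "This phone is great."}, "positive"),
    ({"review": "The battery died in a day."}, "negative"),
]
VARIANTS = {
    "baseline": "What is the sentiment of this review? {review}",
    "explicit": "Classify the sentiment in one word, positive or negative. Review: {review}",
}
# Change one element of the prompt at a time and compare scores on the same cases.
scores = {name: score_prompt(tpl, CASES, fake_model) for name, tpl in VARIANTS.items()}
```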

Prompt Engineering Use Cases

Here are some of the primary use cases where prompt engineering can have a major impact.

Chatbots

Prompt engineering plays a critical role in guiding conversations and ensuring relevance. By carefully crafting prompts, engineers can direct the flow of interactions, keeping chatbots on-topic and able to handle complex customer queries effectively. This results in enhanced user experience and faster resolution of user issues.

Tailored prompts can enable chatbots to handle a diverse range of scenarios, from customer support to engaging in casual, natural-sounding banter. This adaptability is useful for developing chatbots that serve various purposes across different industries, from eCommerce to entertainment and even finance and healthcare.

Content Creation

Prompt engineering significantly improves content generation with generative AI by providing clear, targeted instructions. A common approach is to provide a clear structure as part of the prompt to guide content generation and improve relevance. Prompts can also specify a desired style or tone that matches user requirements.

A critical aspect of prompt engineering in content creation is accuracy. LLMs can “hallucinate” and produce text that includes convincing yet incorrect information. Advanced prompting techniques can provide background information or instruct the model to check its work, reducing the risk of hallucinations.

Software Development

Prompt engineering significantly impacts software development, especially in automated coding and code review processes. By providing detailed, context-rich prompts, developers can guide AI tools to generate more accurate and functionally relevant code snippets. This reduces the manual coding workload and speeds up the development cycle.

Additionally, prompt engineering helps AI tools understand the task at hand, whether it’s fixing bugs, generating new code, or refactoring existing code. Aligning the AI’s output with the engineers’ needs can significantly improve developer productivity.

Image Generation

In image generation, prompt engineering allows for the creation of vivid, detailed images from textual descriptions alone. This capability is useful in industries such as design and media, where custom content creation can be time-consuming and costly.

In educational settings, image generation via precise prompts helps in creating visual aids and simulations that enhance learning and comprehension. By tailoring the prompts, educators can generate highly specific images that align perfectly with their teaching objectives, making abstract concepts more accessible to students.

The History of Prompt Engineering

Prompt engineering recently became mainstream, but is not a new practice. Here’s an overview of how prompt engineering evolved with the emergence of various types of AI models.

Traditional NLP

Natural Language Processing (NLP) is the field of designing machines that understand human language. Initially, NLP relied heavily on rule-based systems where machines processed text based on a set of pre-programmed rules. These systems were rigid and often failed to handle the nuances of language effectively.

At this stage, prompt engineering meant preparing text before feeding it to a system to ease processing and analysis, for example by breaking it into words or adding descriptive tags.

Statistical NLP and Machine Learning

As NLP evolved, there was a shift towards statistical methods, which involve analyzing large amounts of text and learning from the patterns. This approach allowed for more flexibility and adaptability in handling various linguistic features and contexts.

Statistical NLP transitioned into machine learning models, which automatically adjust their algorithms based on the input data. Instead of relying on predefined rules, these models learn to predict text patterns, making them more effective at understanding and generating language. This shift significantly increased the flexibility and accuracy of AI responses.

At this stage, prompt engineering involved fine-tuning of training data and user responses to provide the precise format expected by models. For example, the Google search engine strips stop words like “the”, or symbols like hyphens, from search queries, and automatically adds synonyms to the query before processing it.

Transformer-Based Models

Transformer-based models have changed how NLP tasks are performed, introducing the ability to process words in relation to all other words in a sentence simultaneously. This innovation allows for a deeper understanding of context, which is useful for generating relevant and coherent outputs from prompts.

These models employ self-attention mechanisms that weigh the relevance of all words in a text when generating a response. Modern large language models like OpenAI’s Generative Pre-trained Transformer (GPT) series, Google Gemini, and Meta LLaMA are examples of how transformers enable surprisingly advanced and nuanced understanding of human language.

Prompt engineering helps in harnessing the full potential of these models. Through effective prompts, models like GPT can produce text that is contextually appropriate as well as creative and engaging.

The development of LLMs based on the transformer architecture has also highlighted the importance of iterative testing in prompt engineering. By continually refining prompts based on output quality, engineers can enhance the model’s performance and utility in various applications, from creative writing aids to automated customer support systems.

The Role of a Prompt Engineer

There is growing demand for full-time employees who engage in prompt engineering (see a recent review by Fast Company). A prompt engineer’s role revolves around understanding the nuances of language and the technical requirements of AI models to create prompts that lead to desired outcomes. They act as the translators between human intent and machine interpretation, ensuring that the AI performs tasks correctly and efficiently.

Beyond crafting prompts, these professionals analyze responses, refine input strategies, and continually optimize prompts based on feedback and evolving needs. Their work is critical in environments where AI interactions need to be precise, such as in automated customer service, content generation, and more sophisticated AI applications like diagnostic systems or personalized learning environments.

However, some experts claim that prompt engineering may be important at the current stage of generative AI development, but will become less important in the future. For example, Harvard Business Review posits that prompt engineering will be replaced by broader practices like problem decomposition, problem framing, and problem constraint design.

Prompt Engineering Approaches: From Basic to Advanced

Here are several general approaches to prompt engineering, from the most basic zero-shot or few-shot prompting, to advanced methods proposed by machine learning researchers.

Zero-Shot Prompting

Zero-shot prompting is a technique where an AI model generates a response based on a single input without any previous examples or training specific to that task. This approach is useful when dealing with new or unique scenarios where historical data is unavailable. The effectiveness of zero-shot prompting depends heavily on the model’s general training and its ability to apply broad knowledge to specific questions or tasks.

The challenge with zero-shot prompting is in crafting prompts that are sufficiently informative and clear to guide the AI’s response, despite it having no prior examples to learn from. Engineers must understand the model’s capabilities deeply to predict and manipulate its behavior effectively under these constraints.

Few-Shot Prompting

Few-shot prompting involves providing the AI model with a small number of examples or “shots” to help it understand the context or task before asking it to generate a response. This technique provides some guidance without requiring extensive training data. By seeing how similar problems are approached, the AI can generalize from these few examples to new, related tasks.

For example, by giving an AI model three examples of email responses to customer inquiries, few-shot prompting aims to equip the model to generate a suitable response to a new customer email. This method is particularly useful in scenarios where data is scarce but a moderate level of specificity and adaptation is required.
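The difference between zero-shot and few-shot prompting comes down to whether worked examples are placed in the prompt. A minimal sketch, assuming a simple `Input:`/`Output:` layout (the function name and layout are illustrative choices, not a required format):

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], new_input: str) -> str:
    """Build a prompt from an instruction, worked examples, and a new input.

    With an empty `examples` list this reduces to a zero-shot prompt.
    """
    parts = [instruction]
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # The final block is left open so the model completes the output.
    parts.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(parts)


EXAMPLES = [
    ("Where is my order?", "Thanks for reaching out. Could you share your order number?"),
    ("Can I change my address?", "Of course. Please send the new address and we will update it."),
    ("I was charged twice.", "Sorry about that. We will refund the duplicate charge."),
]
instruction = "Reply to the customer email in one polite sentence."
few_shot = few_shot_prompt(instruction, EXAMPLES, "My package arrived damaged.")
zero_shot = few_shot_prompt(instruction, [], "My package arrived damaged.")
```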

Chain-of-Thought Prompting

Chain-of-thought prompting is designed to encourage an AI to “think out loud” as it processes a prompt, thereby revealing the intermediate steps or reasoning behind its conclusions. This technique is valuable for complex problem-solving tasks where simply providing an answer is insufficient. It helps ensure the AI’s output is not only correct but also backed by reasoning that can be checked.

By structuring prompts to include a request for step-by-step reasoning, engineers can guide AI models to articulate their thought processes. This enhances transparency and trust in AI-generated solutions, particularly in educational and technical fields where understanding the reasoning is as important as the answer itself. Multiple studies have shown that chain-of-thought reasoning can substantially improve the accuracy of LLM responses to complex questions.

Generated Knowledge Prompting

Generated knowledge prompting is used to push AI models to synthesize information or create new insights based on a combination of provided data and learned knowledge. This is crucial for tasks that require innovation and creativity, such as developing new scientific hypotheses or proposing unique solutions to engineering problems.

The key to successful generated knowledge prompting is to formulate questions that stimulate the AI to go beyond mere regurgitation of facts, encouraging it to explore possibilities and generate novel ideas. This requires an intricate understanding of both the subject matter and the model’s capabilities to handle creative tasks.
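In the research literature, generated knowledge prompting is commonly described as a two-step process: the model is first asked to produce background facts about a question, and those facts are then included in the prompt used to answer it. The sketch below follows that formulation; the prompt wording is an assumption, and `fake_model` is an offline stand-in for a real LLM call.

```python
from typing import Callable


def answer_with_generated_knowledge(
    question: str, model: Callable[[str], str], n_facts: int = 2
) -> str:
    # Step 1: ask the model to write down relevant background knowledge.
    knowledge = model(
        f"List {n_facts} facts that are relevant to this question: {question}"
    )
    # Step 2: feed that knowledge back as context for the final answer.
    return model(
        f"Knowledge:\n{knowledge}\n\nUsing the knowledge above, answer: {question}"
    )


def fake_model(prompt: str) -> str:
    """Offline stand-in for an LLM call; replace with a real client."""
    if prompt.startswith("List"):
        return "- Golf players try to finish with the lowest score."
    # Without the generated fact in the prompt, the stub answers incorrectly.
    return "No" if "lowest score" in prompt else "Yes"


question = "Is the goal of golf to get a higher point total than others?"
result = answer_with_generated_knowledge(question, fake_model)
```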

Tree-of-Thought Prompting

Tree-of-thought prompting guides the AI to explore multiple potential solutions or pathways before arriving at a final decision. This method is particularly effective for tasks with multiple valid approaches or solutions, such as strategic game playing or complex decision-making scenarios. Encouraging the AI to consider a range of possibilities lets it evaluate the best course of action more comprehensively.

Prompts designed for this technique require the AI to outline various scenarios or decision trees, assessing the pros and cons of each before making a recommendation. This enhances the decision-making quality while providing users with insights into the different options considered by the AI.
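The search behind tree-of-thought prompting can be sketched as a beam search: at each step several candidate “thoughts” are proposed, scored, and only the best few are kept. In a real system both `propose` and `score` would be LLM calls; here they are replaced by simple arithmetic stand-ins on a toy problem (pick two numbers that sum to a target) so the example runs offline. All names are hypothetical.

```python
from typing import Callable, List

State = List[int]  # a partial solution: the numbers chosen so far


def tree_of_thought(
    propose: Callable[[State], List[State]],
    score: Callable[[State], float],
    depth: int,
    beam_width: int,
) -> State:
    """Breadth-first search that keeps the best `beam_width` states per level."""
    frontier: List[State] = [[]]
    for _ in range(depth):
        # Expand every surviving state into its candidate next steps.
        candidates = [nxt for state in frontier for nxt in propose(state)]
        # Keep only the most promising candidates for the next level.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return frontier[0]


TARGET = 7


def propose(state: State) -> List[State]:
    # Stand-in for "ask the model for possible next steps".
    return [state + [n] for n in (1, 3, 4)]


def score(state: State) -> float:
    # Stand-in for "ask the model to rate this partial solution".
    return -abs(TARGET - sum(state))


best = tree_of_thought(propose, score, depth=2, beam_width=2)
```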

Directional-Stimulus Prompting

Directional-stimulus prompting focuses on directing the AI’s attention to specific elements of the input or desired outcome. This method is used to enhance the relevance and accuracy of AI responses, especially in fields like medical diagnosis or legal analysis, where focusing on the right details is crucial.

Effective directional-stimulus prompts specify which aspects of the input should be prioritized, guiding the AI to concentrate its processing on these areas. This helps ensure that the AI does not overlook critical information, leading to more precise and applicable outputs.

Least-to-Most Prompting

Least-to-most prompting involves structuring prompts to gradually increase in complexity or specificity. This technique is particularly useful in educational settings or when training AI to handle progressively challenging tasks. It helps the AI build its responses step-by-step, starting with simple concepts and gradually integrating more complex elements.

By scaffolding the information in this way, least-to-most prompting supports more effective learning and adaptation by the AI, allowing it to develop a deeper understanding of the subject matter or the task requirements as it progresses through the prompts.
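One common formulation of least-to-most prompting has two stages: the model first breaks a problem into simpler subquestions, then answers them in order, with each answer added to the context for the next. The sketch below illustrates that flow under assumptions; the prompt wording is invented for the example and `fake_model` is an offline stand-in that also records the prompts it receives.

```python
from typing import Callable, List

seen_prompts: List[str] = []


def least_to_most(question: str, model: Callable[[str], str]) -> str:
    # Stage 1: ask for a decomposition, simplest subquestion first.
    plan = model(f"Break this problem into simpler subquestions, one per line:\n{question}")
    subquestions = [line.strip() for line in plan.splitlines() if line.strip()]
    # Stage 2: answer each subquestion, carrying earlier answers forward.
    context = f"Problem: {question}\n"
    answer = ""
    for sub in subquestions:
        answer = model(f"{context}Q: {sub}\nA:")
        context += f"Q: {sub}\nA: {answer}\n"
    return answer


def fake_model(prompt: str) -> str:
    """Offline stand-in for an LLM call; replace with a real client."""
    seen_prompts.append(prompt)
    if prompt.startswith("Break"):
        return "How long does one trip take?\nHow many trips fit in 15 minutes?"
    current = prompt.rsplit("Q: ", 1)[1]  # the subquestion being asked now
    return "5 minutes" if "one trip" in current else "3"


final = least_to_most(
    "Climbing the slide takes 4 minutes and sliding down takes 1 minute. "
    "The slide closes in 15 minutes. How many times can Amy slide?",
    fake_model,
)
```

The last prompt sent to the model contains the answer to the first subquestion, which is what lets the harder question build on the easier one.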

Graph Prompting

Graph prompting integrates knowledge graphs into the prompting process of large language models (LLMs) to refine the relevance and quality of responses. The process starts with subgraph retrieval, which converts user input into a numerical form to identify relevant entities and their neighboring connections within the graph. This subgraph is then refined through a graph neural network (GNN), ensuring that the retrieved graph information is contextually relevant to the user input.

After refining the graph-based embeddings, the system uses them to prompt the LLM for answering user queries. This “soft” graph prompting method implicitly guides the LLM with graph-based parameters, offering improved results compared to traditional “hard” prompts. Experimental results show significant improvements in commonsense reasoning tasks, demonstrating that graph prompting can unlock new levels of contextual understanding in LLMs.
