GPTScript v0.8 is out, and it’s a big one! This release introduces major upgrades to our chat-based user experience and makes building AI-powered assistants, chatbots, copilots, and agents significantly simpler. It reflects GPTScript’s evolution toward being more chat-friendly. You can still write fully or semi-automated scripts, but we’re putting more effort into the chat experience because we believe it’s the best way to unlock the full value of integrating AI with your systems. Without further ado, let’s dive into the changes.

Note: If you’re interested in a primer on writing chat-based GPTScripts, check out our examples.

A New Terminal UI

We’ve introduced a new streamlined terminal UI for chat-enabled GPTScripts. Here’s a shot of it in action:

A few key features to highlight:

- You’ll now be prompted when your script wants to call tools and perform actions. Any calls to the built-in system tools (which can modify your local system) prompt as well as any external tools (ie: Tools: github.com/gptscript-ai/image-generation).

- If your script defines multiple agents, you’ll be able to see exactly which one you’re talking to.

- This experience hides a lot of debug information compared to previous UX. You can get back to the old experience by passing the –disable-tui flag.

It’s fairly easy to write chat assistants that integrate with your existing CLIs and APIs

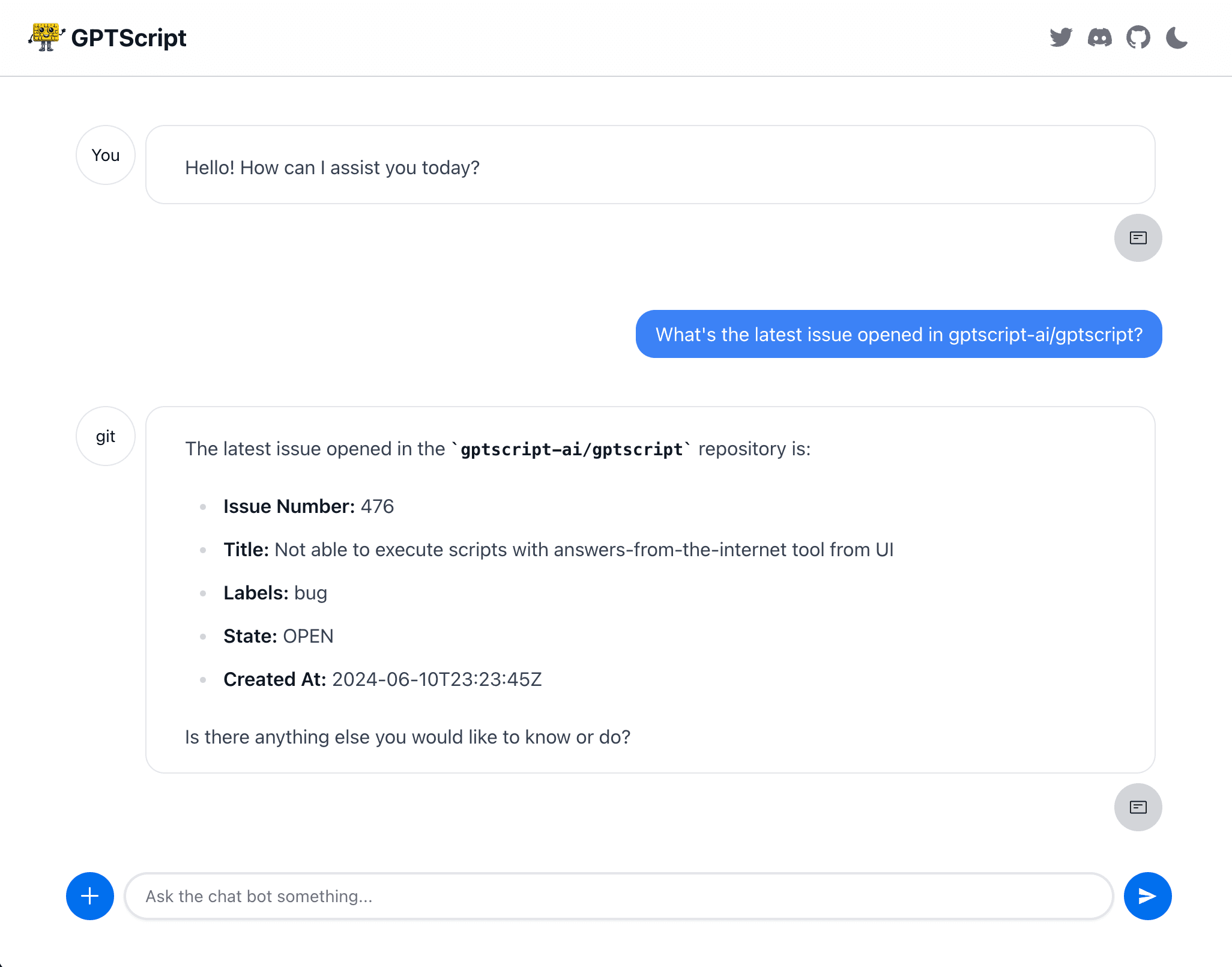
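As an illustration of when these prompts appear, consider a hypothetical chat tool like the one below, which references the external image-generation tool mentioned above. Under the behavior described in the list, you would be asked to confirm each call to that tool before it runs, and the same would apply to built-in system tools such as sys.exec. The tool name, description, and instructions are assumptions made for this sketch, not an official example.

```
Name: Image Assistant
Description: A hypothetical assistant that generates images from the user's descriptions
Tools: github.com/gptscript-ai/image-generation
Chat: true

Help the user generate images from their descriptions. Ask clarifying questions if a request is ambiguous.
```

Saved under a hypothetical filename such as image-assistant.gpt, running gptscript image-assistant.gpt would open it in the new terminal UI, while gptscript --disable-tui image-assistant.gpt would fall back to the previous, more verbose output.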

A Browser-based UI

Terminal isn’t your thing? You can now launch your chat-based GPTScripts in the browser. To use this feature, just add the --ui flag when running a chat-enabled script, like so:

```shell
gptscript --ui github.com/gptscript-ai/llm-basics-demo
```

Here’s a screenshot of the browser-based chat experience:

We think this experience will be great for folks who are more comfortable in the browser than the terminal. It has the same feature set as the terminal UI including prompting for permission and showing you exactly which tool you’re chatting with. It also has a very handy debugging UI that shows the "stack trace" of each chat interaction – including which tools were called and what was sent to and from the LLM.

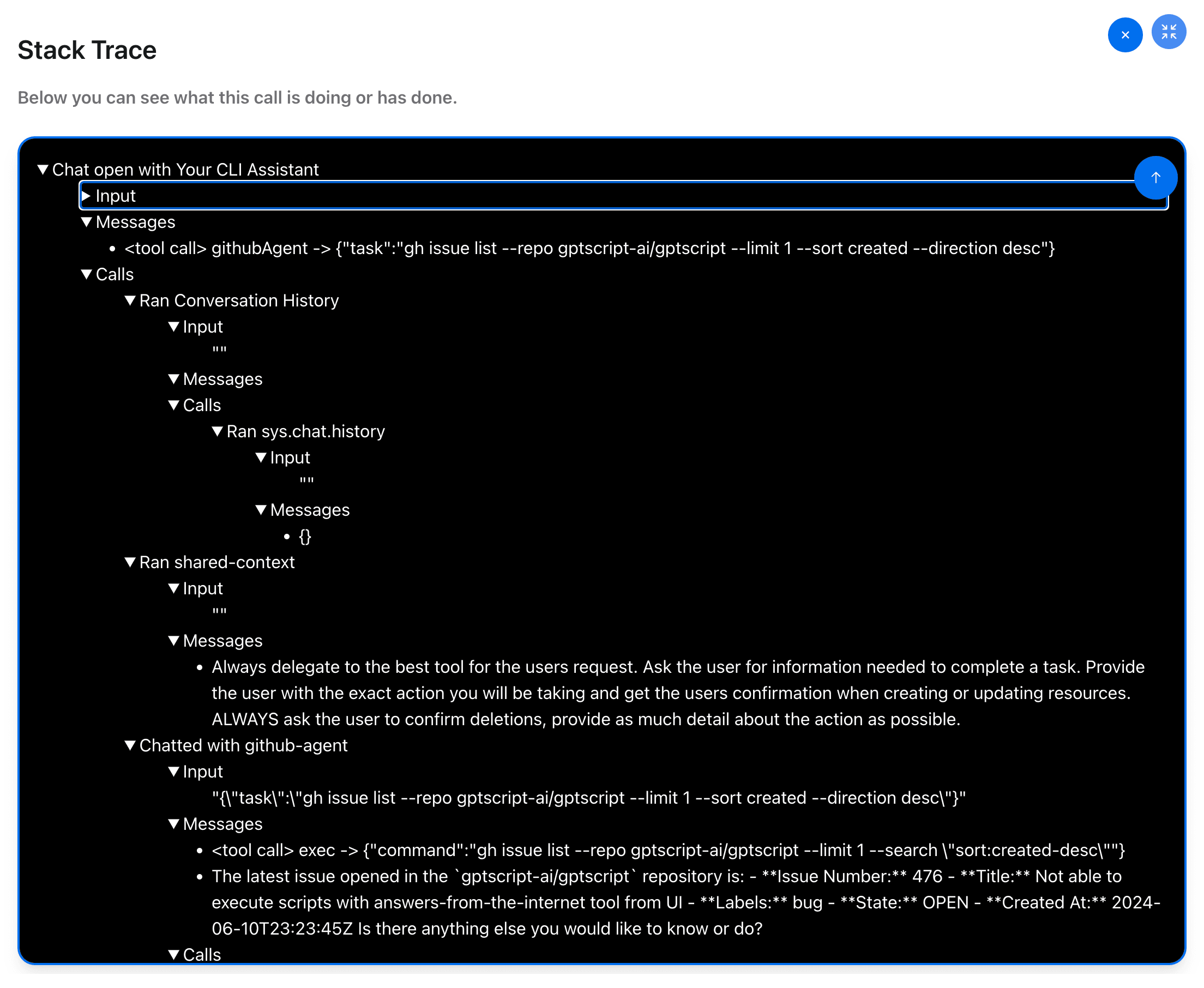

Here’s that view in action:

This view gives you insight into how your scripts arrive at their answers and actions, and it can help you improve and refine them. We feel it’s invaluable for understanding exactly how an LLM interacts with tools and systems.

New "Agents" Field

Now, when writing a tool, you can specify which "agents" (chat-enabled tools) it has access to using the Agents field, like so:

```
Name: Your CLI Assistant
Description: An assistant to help you with local cli-based tasks for GitHub and Kubernetes
Agents: k8s-agent, github-agent
Chat: true

...
```

For this example in full, see here.

Agents specified this way become part of a group and can hand off to each other. In the example above, this lets you ask a GitHub question, then a Kubernetes question, then a GitHub question again, and the conversation is transferred to the proper agent each time. You could achieve similar functionality before, but it required much more boilerplate and setup in your script.
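To make the hand-off more concrete, here is a minimal sketch of how the two agents named in the Agents field could be defined in the same file as the entry tool, with each tool separated by ---. The descriptions and instructions are illustrative placeholders rather than the contents of the full example, and the sketch assumes the kubectl and gh CLIs are installed and authenticated locally, since each agent runs them through the built-in sys.exec tool.

```
Name: Your CLI Assistant
Description: An assistant to help you with local cli-based tasks for GitHub and Kubernetes
Agents: k8s-agent, github-agent
Chat: true

Help the user accomplish their tasks. Greet the user and ask what they need help with.

---
Name: k8s-agent
Description: An agent that helps with a Kubernetes cluster by running kubectl commands
Tools: sys.exec
Chat: true

You have the kubectl CLI available. Use it to accomplish the Kubernetes tasks the user asks for.

---
Name: github-agent
Description: An agent that helps with GitHub tasks by running gh commands
Tools: sys.exec
Chat: true

You have the gh CLI available. Use it to accomplish the GitHub tasks the user asks for.
```

The Description lines matter here, since they are most likely what the model sees when choosing which agent should take over the conversation. Because every sys.exec call is a built-in system tool call, the new terminal and browser UIs would prompt before each command runs.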

Wrapping Up

In addition to these marquee features, we coupled this release with an overhaul of our docs site, which includes several practical examples for building your own assistants. The release also includes dozens of other fixes and improvements, which you can review in the changelog.

This release is just a first step towards improving the user experience of GPTScript. Soon you’ll see a browser-based UI for authoring GPTScripts, improved Knowledge/RAG tools, and much more. We’d love to hear your feedback via Discord or GitHub.